The new age of analytics fueled by hyper-converged storage platforms

Are you running a lot of proofs of concept, or trying to figure out different ways to optimize your infrastructure and decrease costs?

Hi, I’m Peter Nichol, Data Science CIO. Allow me to share some insights.

What is hyper-converged infrastructure?

Today, we’re going to talk about hyper-converged infrastructure, also known as HCI, what it can do, and the potential benefits.

HCI is a term that I came across over the last couple of weeks. I was curious to learn exactly what it was and what it could do for data analytics. I envisioned appliances and other technologies with built-in processors, like IBM Netezza (a data warehouse appliance). I wanted to know what this hyper-converged infrastructure could actually achieve.

Hyper-converged infrastructure combines compute, storage, and networking into a single, virtualized environment. It takes the compute, RAM, and storage that you’re already familiar with and treats them all as elements of one system. You can dynamically configure and allocate compute, RAM, and storage, and set up different networking configurations based on your needs, all within a single unit.

This single virtualized system uses a software-defined approach to leverage dynamic pools of storage, replacing dedicated hardware.

The benefits of hyper-converged infrastructure

First, there is less risk of vendor lock-in. The rationale is that you’re not as susceptible to vendor lock-in because you have an easily swapped-out appliance. Second, HCI solutions offer public cloud agility with the control that you probably want from a solution hosted on-prem. Third, total lifetime costs tend to be lower because compute, storage, and networking are combined in a single unit.

Hyper-converged storage platform players

As in many markets, a few large players control a considerable percentage of the hyper-converged storage platform market. Of course, there are hundreds of niche players too, but these staples provide a good starting point for understanding the capabilities offered.

Major hardware players

- HPE/SimpliVity

- Dell EMC

- Nutanix

- Pivot3

Major software players

- VMware (vSAN)

- Maxta

HPE, Nutanix, and Pivot3 provide a single virtualized environment that can be leveraged almost out-of-the-box. To show where these platforms are most efficient, here are a few use cases.

- High-throughput analytics: When your business requires high compute capabilities and demands faster processing, this type of integrated solution offers many possibilities. Typically, this business case is best applied when heavy data processing can benefit from having the storage and compute very close together. This can be a significant advantage for the end users’ perception of application and visualization performance.

- Virtualized desktops: Running virtualized desktops often requires a wide range of scalability. Over the weekend, for example, you might run 25% of your normal nodes, whereas during the week you might have peak times where you’re running 125% of the weekly average. Based on the number of users and volume of workgroups your enterprise supports, the ability to rapidly scale up and down can be advantageous.

- COTS specifications for performance: Much larger out-of-the-box solutions, like SAP or Oracle, typically have predetermined compute, storage, and networking specifications that are required to run the environment to performance standards. Using an HCI-type environment is a great way to ensure the setup needed for optimal performance. Once the standard specifications are known, you can scale your hyper-converged infrastructure directly to those needs or overbuild to ensure you’ll hit or exceed performance targets.
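The scaling range in the virtualized-desktop use case can be turned into a rough node count. This is a minimal sketch, assuming a hypothetical baseline of 40 nodes for an average load; the function name and the baseline are illustrative only and do not come from any vendor's sizing tool.

```python
import math


def nodes_needed(baseline_nodes: int, load_factor: float) -> int:
    """Return the node count for a load expressed as a fraction of the baseline.

    Rounds up, because a fractional node cannot be provisioned.
    """
    return math.ceil(baseline_nodes * load_factor)


# Hypothetical baseline: 40 nodes serve the average load.
BASELINE_NODES = 40

weekend_nodes = nodes_needed(BASELINE_NODES, 0.25)  # weekend: 25% of normal
peak_nodes = nodes_needed(BASELINE_NODES, 1.25)     # weekday peak: 125% of average

print(f"weekend: {weekend_nodes} nodes, peak: {peak_nodes} nodes")
```

With these assumed numbers, the environment swings between 10 and 50 nodes, which is the kind of range that makes rapid scale-up and scale-down worth planning for.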

What’s in the market today for HCI?

There are hundreds of products that offer similar but not the same functionality. Therefore, it’s essential to consider how each technology component extends existing capabilities before adding technology into an already complex ecosystem. All too often, leaders end up adding technologies because they are best-in-class and end up duplicating technologies in their architecture stack that perform near-identical functions.

- Nutanix Acropolis has five key components that make it a complete solution for delivering any infrastructure service.

- StarWind is the pioneer of hyper-convergence and storage virtualization, offering customizable SDS and turnkey (software+hardware) solutions to optimize underlying storage capacity use, ensure fault tolerance, achieve IT infrastructure resilience and increase customer ROI.

- VxRail: As the only fully integrated, preconfigured, and pre-tested VMware hyper-converged infrastructure appliance family on the market, VxRail dramatically simplifies IT operations, accelerates time to market, and delivers incredible return on investment.

- VMware vSAN is a software-defined, enterprise storage solution powering industry-leading hyper-converged infrastructure systems.

- IBM CS821/CS822: IBM Hyperconverged Systems powered by Nutanix is a hyper-converged infrastructure (HCI) solution that combines Nutanix Enterprise Cloud Platform software with IBM Power Systems.

- Cisco HyperFlex: Extends the simplicity of hyper-convergence from core to edge and multi-cloud.

- Azure Stack HCI: The Azure Stack is a portfolio of products that extend Azure services and capabilities to your environment of choice—from the data center to edge locations and remote offices. The portfolio enables hybrid and edge computing applications to be built, deployed, and run consistently across location boundaries, providing choice and flexibility to address your diverse workloads.

- Huawei FusionCube BigData Machine is a hardware platform that accelerates the Big Data business and seamlessly connects with mainstream Big Data platforms. The BigData Machine provides the high-density data storage solution and Spark real-time analysis acceleration solution based on Huawei’s innovative acceleration technologies.

Each of these technologies adds something specific in terms of functionality and capability extension. Consider which products are already part of your infrastructure and integrate well with potential new technology additions.

Advantages to running an HCI

Hyper-converged infrastructure can offer companies huge benefits, and not all of them are associated with pure performance gains.

First, when using hyper-converged infrastructure, you don’t need as many resources to maintain and support the environment. An HCI environment is less complex; it removes the conventional layers of microservices that commonly require technical specialization. By eliminating the need for specialized technology resources, the effort spent on maintenance, support, and enhancement decreases, adding to cost savings.

Second, there is a financial benefit to having the compute and storage very close together. It depends on the specific business case, of course, but there will almost always be cost savings realized from this coupled architecture.

Third, major business transformations can be more easily supported. For example, let’s assume that the business pivots and traffic increases by 40%. Usually, this would be beyond what would be generally supported for elastic growth, and therefore there would be a performance hit. However, because this technology is plug-and-play, it’s easy to swap out an existing appliance for a newer appliance with improved capabilities. Of course, these applications can auto-scale up to a limit, but swapping out appliances is a viable option if that limit is hit.

As you consider starting up proofs of concept (POCs) and exploring different ways to provide value to your business customers, evaluating hyper-converged technologies and infrastructures might be a safe way to ensure that performance guarantees are achieved.

If you found this article helpful, that’s great! Also, check out my books, Think Lead Disrupt and Leading with Value. They were published in early 2021 and are available on Amazon and at http://www.datsciencecio.com/shop for author-signed copies!

Hi, I’m Peter Nichol, Data Science CIO. Have a great day!

The play for in-storage data processing to accelerate data analytics

Is there a business case for in-storage data processing? Of course, there is, and I’m going to explain why.

Hi, I’m Peter Nichol, Data Science CIO.

Computational storage is one of those terms that’s taken off in recent years that few truly understand.

The intent of computational storage

Computational storage is all about hardware-accelerated processing and programmable storage. The general concept is to move data and compute closer together. When your data is far away from your compute, it not only takes longer to process, but it’s also more expensive. This scenario is common in multi-cloud environments, where moving data out (egress) is a requirement that comes at a very high cost. So the closer we can move that data to our compute power, the cheaper it will be and, ultimately, the faster we will be able to execute calculations.
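A back-of-the-envelope calculation shows why distance between data and compute matters. This is a rough sketch only: the dataset size and both link speeds are hypothetical, and it ignores protocol overhead and any per-gigabyte transfer fees a cloud provider might charge.

```python
def transfer_seconds(data_gb: float, bandwidth_gbps: float) -> float:
    """Seconds to move data_gb gigabytes over a link of bandwidth_gbps gigabits per second."""
    if bandwidth_gbps <= 0:
        raise ValueError("bandwidth must be positive")
    return data_gb * 8 / bandwidth_gbps  # 8 bits per byte


DATASET_GB = 1_000      # hypothetical 1 TB dataset
REMOTE_LINK_GBPS = 1    # hypothetical link between two clouds
LOCAL_LINK_GBPS = 100   # hypothetical path when data sits next to the compute

remote = transfer_seconds(DATASET_GB, REMOTE_LINK_GBPS)
local = transfer_seconds(DATASET_GB, LOCAL_LINK_GBPS)

print(f"remote: {remote / 3600:.1f} h, local: {local:.0f} s")
```

Under these assumptions, the same dataset takes a little over two hours to reach remote compute and under a minute and a half when the compute is nearby, before any processing has started.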

Business cases for computational storage

The easiest way to understand computational storage is to look at a few examples. These concepts are primarily being developed by startups and are most commonly known as “in-situ processing” or “computational storage.”

First, let’s focus on an example around hyperscalers. Hyperscale architectures are used for things like AI compute, high-throughput video processing, and even composable networking. Organizations like Microsoft are incorporating these technologies into their product suites. For example, Microsoft uses field-programmable gate arrays (FPGAs) in its search engine. The accelerated hardware lets the search engine return results and analytics in a matter of microseconds. These capabilities are also expanding into other areas, like Hadoop MapReduce, which uses DataNodes for both storage and processing.

Second, highly distributed architectures are very effective. Hyperscale architectures provide a great foundation for scaling computational storage capabilities. The concept of separating hardware from software is not new. Even with AWS Lambda (a serverless compute service often used in data streaming pipelines), we can deconstruct an application to break its data flow into several parts. This makes managing multiple data streams much more streamlined. For example, data feeds can be individually ingested into a data stream, and AWS Lambda can then manage the data funnel from that stream into computational storage. Once the stream is fed into computational storage, it can be processed more efficiently, and instructions can execute faster than they otherwise would.

Why look at computational storage now?

Do we as leaders even care about computational storage? Yes, we do. Here’s why.

Looking back over the last decade, the way data is stored has been designed and architected around how CPUs process that data. That was great when hardware designs aligned with the way data was processed. Unfortunately, how CPUs are designed and architected has changed dramatically over the last ten years. As a result, we need to change how we store data to process it more effectively, faster, and cheaper.

The industry trend to adopt computational storage

Snowflake is an excellent example of a product that separates compute from storage. This gives business leaders a clear opportunity to realize the benefits of that separation. It helps accelerate data read and processing cycle times. The advantage is that users experience faster application and interface responses, with faster visualization and presentation of the data requested.

If you’re curious to research additional topics around computational storage, the Storage Networking Industry Association (SNIA) formed a working group in 2018 that has the charge to define vendor-agnostic interoperability standards for computational storage.

As you think about your technology environment and how you’re leveraging and processing data, consider how far your data is from your ability to compute that data and process it analytically. Data needs are growing exponentially, and the demand for computational storage will be tightly coupled to the need to display and visualize organizational data. Your organization might benefit from leveraging computational storage to connect high-performance computing with traditional storage devices.

Capturing the power of automation with DevOps

Are you trying to deliver more functionality into production at a faster cadence? Are users asking your team to prioritize time-to-market over quality? Do you have release cycles longer than 3-4 days? Do you constantly review and prioritize issues that continue to resurface?

You might be in a great position to benefit from DevOps.

Hi, I’m Peter Nichol, Data Science CIO.

DevOps integrates the development and operations activity of the software development process to shorten development cycles and increase deployment frequency. DevOps centers around a handful of critical principles. If you’re ever unclear about how DevOps might apply, bring yourself back to the fundamental principles. This helps to drive the intent behind the outcomes achieved by adopting and implementing DevOps.

DevOps critical principles

- Continuous delivery: constantly delivering useable functionality

- Continuous improvement: introducing a steady pace of improvements to existing models

- Lean practices: improving efficiency and effectiveness by eliminating process waste

- System-oriented approaches: focusing on conditions required for maximum system effectiveness

- Focus on people and culture: optimizing people and inspiring culture to drive positive outcomes

- Collaboration (development and operations teams): establishing a shared vision and shared goals to enable inclusion

DevOps automates interactions that otherwise might result in unplanned delays or bottlenecks.

What is DevOps, and where did it originate?

Today, we’re going to talk about DevOps—development operations. DevOps embraces the idea of automated collaborations to develop and deploy software.

The term “DevOps” was coined in 2009 by Patrick Debois, often called the father of DevOps. DevOps is a combination of development and operations. It’s a classic example of yin and yang:

- Developers want change

- Operations want stability

Combining development and operations sounds simple enough, but what elements make up development and operations? Let’s take a minute to define these terms clearly.

Development includes:

- Software development

- Build, compile, integrate

- Quality assurance

- Software release

- Support of deployment and production tasks as needed

Operations includes:

- System administration

- Provisioning and configuration

- Health and performance monitoring of servers, critical software infrastructure, and applications

- Change and release management

- Infrastructure orchestration

What problem does DevOps solve?

Developers typically want to release more code into production, enabling increased user functionality. This results in an environment of constant change and often questionable stability. Meanwhile, operations is focused on stability and less so on changes. Operations wants to establish a stable and durable base for operational effects; i.e., supporting production-grade and commercial applications that don’t fare well with constant changes.

The conflicting objectives between development and operations create polarization of the team. This polarization creates a natural conflict. DevOps attempts to bridge this gap through automation and collaboration.

DevOps connects your development and operations teams to generate new functionality while maintaining existing or commercial functionality in production. DevOps primarily has three goals that are targeted either when it’s first introduced into an organization or after an internal DevOps optimization.

DevOps goals:

- Continuous value delivery

- Reduction in cycle time

- Improved speed to market

Value delivery validates that what’s developed is useful and has business adoption. Cycle-time reductions go hand in hand with an increase in deployment frequency. Lastly, speed to market looks at the demand intake process and reduces the time from the initial request to when usable functionality is available in production. These combined benefits drive the majority of use cases in support of DevOps.

DevOps benefits

The more time you spend exploring DevOps, the more obvious it becomes how expansive the benefits of implementing and adopting it are. Below I’ve listed several benefits; however, let me first expand on a few of my favorites:

- Continuous value delivery: as we automate these different processes through the development and operations lifecycle, we remove waste and provide the ability to achieve continuous value.

- Faster orchestration through the pipeline: once we start to automate, we reduce the manual handoffs. As a result, that functionality moves through, gets tested, and gets put into production much faster.

- Reduced cycle times: as we put functionality into production and automate it, the time it takes from start to finish begins to decrease. This results in the perception of increased value or a faster cadence of the value we can provide.

Here are some additional and expected benefits of adopting DevOps principles:

Benefits of DevOps

- Continuous software delivery

- Faster detection and resolution of issues

- Reduced complexity

- Satisfied and more productive teams

- Higher employee engagement

- Greater development opportunities

- Faster delivery of innovation

- More stable operating environments

- Improved communication and collaboration

How do we measure success?

It sounds like DevOps has benefits. That’s great. But how do we measure the success of DevOps efforts? Entire books have been written on this topic. There’s no lack of knowledge available that’s been published and even posted on the Internet. However, I boil down measuring DevOps success into three buckets:

- Performance

- Quality

- Speed

By starting with these anchors for managing success, you’re sure to have a simple model to communicate to your leadership. Although there are hundreds of other metrics, I find these to be the most effective when presenting quantified outcomes of DevOps implementations and adoptions.

Performance

- Uptime

- Resource utilization

- Response time

Quality

- Success rate

- Crash rate

- Open/close rates

Speed

- Lead time

- Cycle time

- Frequency of release
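The three speed metrics can be computed from a simple log of work items. This is a minimal sketch under stated assumptions: the `WorkItem` fields and the sample dates are hypothetical, lead time is taken as request to production, and cycle time as start of work to production. Teams define these terms differently, so adjust them to your own convention.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean


@dataclass
class WorkItem:
    requested: date  # when the business asked for the change
    started: date    # when development began
    deployed: date   # when the change reached production


# Hypothetical work items delivered during a four-week window.
items = [
    WorkItem(date(2021, 3, 1), date(2021, 3, 3), date(2021, 3, 8)),
    WorkItem(date(2021, 3, 2), date(2021, 3, 8), date(2021, 3, 12)),
    WorkItem(date(2021, 3, 9), date(2021, 3, 16), date(2021, 3, 22)),
]
WINDOW_WEEKS = 4

# Lead time: request to production. Cycle time: work start to production.
lead_time_days = mean((i.deployed - i.requested).days for i in items)
cycle_time_days = mean((i.deployed - i.started).days for i in items)

# Frequency of release: distinct deployment dates per week in the window.
releases_per_week = len({i.deployed for i in items}) / WINDOW_WEEKS

print(lead_time_days, cycle_time_days, releases_per_week)
```

Tracking the same three numbers quarter over quarter gives leadership a simple trend line without needing the full catalogue of DevOps metrics.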

Putting DevOps into action

We covered what DevOps is, where it originated, the problem it solves, the benefits, and how to measure success. Now we’re ready to discuss how to put DevOps into action. This begins by understanding the DevOps lifecycle:

- Build

- Test

- Deploy

- Run

- Monitor

- Manage

- Notify

I’ll expand on products that are used across each phase. However, to do that, I’m going to simplify the lifecycle steps into three phases:

- Develop and test: continuous testing

- Release and deploy: short release cycles

- Monitor and optimize: improved product efficiency

Here are best-in-class products that are commonly found in a DevOps pipeline:

Develop and test – continuous testing

- Confluence

- AccuRev

- JUnit

- IBM Rational

- Fortify

- Visual Studio

- VectorCast

Release and deploy – shorter release cycles

- ServiceNow

- Maven

- Artifactory

- NAnt

- Jenkins

- Bamboo

Monitor and optimize – improved product efficiency

- Zabbix

- Graphite

- Nagios

- QA Complete

- VersionOne

- New Relic

When we think about the aspects of DevOps, we quickly land on what type of tools we can introduce into these environments.

- Development: Visual Studio, Jira, GitLab, Azure DevOps, GitBucket

- Build: Jira, GRUNT (Bamboo plugins), Bamboo SBT, Gradle, and Jenkins

- Test: Visual Studio, TestLink, TestRail, Jenkins, LoadImpact, and JMeter

We also have DevOps tools like Jenkins, Chef, Puppet, and others that provide workflow and workflow automation.

A quick DevOps example

Many of my LinkedIn followers asked for a practical example of how DevOps is leveraged in the wild. Enjoy.

Situation: A new data-science and advanced-analytics team was recently launched that focuses on data literacy to allow access to data that must be validated and published. However, data stewards are reporting data publishing is taking too long and resulting in reporting on old data sets. The opportunity is to get data into the hands of power users (scientists, bioinformatics, and the data community) more quickly. Today, users are reporting slowness in data availability.

Complication: The data isn’t being ingested from a single source. In fact, because of the intelligence generated from the data sourcing, the timing of sources varies based on data timeliness. The team is leveraging a data lake to capture patient longitudinal, claims, EHR, and EMR data, along with sales data from sources such as IQVIA, Veeva CRM, and Salesforce Marketing Cloud (SFMC), which includes promotional activities, general marketing, and financial data.

Resolution: The team developed an entire automation workflow in Jenkins. This resulted in a 42% improvement in data timeliness. Data was refreshed every four days, not every seven days.
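One way to arrive at a figure close to the one quoted is to treat the improvement as the relative reduction in the refresh interval. The article does not state how the percentage was calculated, so this is an assumption; the function name is illustrative.

```python
def interval_reduction(old_days: float, new_days: float) -> float:
    """Fractional reduction in the refresh interval."""
    return (old_days - new_days) / old_days


# Refresh interval moved from every seven days to every four days.
reduction = interval_reduction(old_days=7, new_days=4)

print(f"{reduction:.1%}")
```

Three fewer days out of seven works out to just under 43%, which is in line with the improvement reported.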

Here’s the general approach that was taken to achieve the results:

- Develop + build + test

- Daily scrum – maximum of 15 minutes where each team member can share updates

- Sprint review

- Daily/weekly status updates to client

- Automated tasks to cover:

- Build management

- Automated unit testing

- QA environment configuration

- Code completion

- Code freeze

- Regression testing on preview/stage environment before going live

- Go live

DevOps offers opportunities to get to market faster, decrease cycle times, and ultimately get functionality to your business users more quickly. Decrease your dependence on manual processes and introduce DevOps throughout your delivery pipeline.

Applying demonstrated competency to evaluate remote team performance

Have you ever been told to improve but weren’t sure what to work on? Have you asked your team to do better and improve their performance—and then, a week later, the group wants to have a discussion about precisely what that means?

Hi, I’m Peter Nichol, Data Science CIO.

What is Team Point?

Today, we’re going to talk about a concept I created called Team Point. Team Point is an approach for providing individuals and teams with a quantifiable baseline for performance.

If you’re a team leader and are charged with improving that team, this approach is enormously powerful. The beauty of Team Point is that it offers a method to measure the team’s progress toward a quantifiable objective. No longer will you be introducing techniques and not be able to link them to performance. From now on, every best practice you implement can be measured and quantified to determine its positive (or negative) effect on your team.

What problem are we solving?

Have you ever taken ownership of a new team or joined a new organization? Initially, the role sounded straightforward. Then, you heard rumors of the team not delivering. Stakeholders were getting increasingly frustrated because quality varied from product to product and manager to manager. You’ve started to get more requests for named individuals, resulting in some staff being overallocated and others being under-allocated.

When everything is on fire, where do you start? These fires pop up almost everywhere:

- Deliverables are being missed.

- Issues aren’t communicated.

- Risks aren’t escalated in a timely fashion.

- Critical milestones are delayed, resulting in additional escalations.

- Project meetings have conflicting agendas.

- Stakeholders want team members removed.

When everything is on fire, one thing is for sure—you can’t be running from hot spot to hot spot. That doesn’t work. Here’s what does work.

Choose a framework for evaluation

This hot environment requires an organized and systematic approach. Team Point works by applying an evaluation method of, “show me, don’t tell me.”

It’s essential to choose a framework and identify categories that accurately represent the areas of competency that you’re interested in evaluating. This will vary depending on the role, industry, and domain. I’ll share what I use for project and program management areas to demonstrate competency. I’ve found that these categories, which originate from a previous PMI PMBOK version, work exceptionally well to capture the significant elements of project management competence.

Project Management

- Integration management

- Scope management

- Time management

- Cost management

- Quality management

- Human Resource management

- Risk management

- Procurement management

- Stakeholder management

- Communication management

I’ve adjusted some of the competence areas for program managers to make them more strategic and outcome-focused in nature.

Program Management

- Program portfolio management

- Strategy and investment funding

- Program governance

- Program management financial performance

- Portfolio metrics and quality management

- Program human capital management

- Program value and risk management

- Program contract management

- Program stakeholder engagement

- Communication management

The PMI PMBOK 7th edition was released on August 1, 2021. There are valuable pieces that leaders may want to pull from this text. I’ve referenced the eight most functional performance domains:

Performance Domains (PMBOK 7th edition)

- Stakeholders: interactions and relationships

- Team: high performance

- Development approach and lifecycle: appropriate development approach

- Planning: organizing, elaborating, and coordinated work

- Project work: enabling the team to deliver

- Delivery: strategy execution, advancing business objectives, delivery of intended outcomes

- Uncertainty: activities and functions associated with risks and uncertainty

- Measurement: acceptable performance

It’s critical to select areas of competency that resonate with your executive leadership. Don’t feel you have to use conventional categories. Much of the value of this framework comes from experienced leaders tailoring the areas of competency to their business purpose and desired outcomes.

Identify questions to demonstrate competency

The majority of questions I receive about Team Point are about how to administer the approach in a real setting. So, I’ll share a specific example of how this works and why the system is uniquely impactful.

No one wants to be told how to think. The close follow-up to this is, managers always have unique or creative ways of operating that they’ve matured over the years. Some of these techniques are unusual but effective, while others have deteriorated over time. Here are a few examples in practice.

Contact Lists

First, in this case, I sit down with the project manager and ask about how they manage the contacts for the project. The trick is, there’s no “right” answer here, but there are wrong answers. The tool they use to track contacts doesn’t matter. I’m also not checking how often that list is updated. The project manager will either produce a contact list or say why it matters that I know who to contact.

In this case, I’ll pose a question: “You’re on PTO (paid time off), and the business analyst on your project wants to contact the tech lead. Where do they go to find that information?” It’s clear how inefficient communication will be if those business analysts have nothing to reference.

If the project manager is organized, they might have a contact list documented in Confluence. The project manager could have a project list included in a project charter. There may be a SharePoint library with contacts listed. Each of these is a reasonable solution. The goal is to create a reasonable approach for the business analyst to get to that information. Often, I’ll hear something like, “Well, everyone knows how to find that contact information, even if it’s not documented.” I’ll usually reply with, “If we text Michelle, your business analyst, now, she’ll know how to find the database lead’s cell phone number, right?”

The value here is asking the project manager to show me—not tell me—where the information is located. I’ll have the project manager do a screen share and navigate where the business analyst would find relevant contact information.

If the project manager can’t find the information in five to 10 seconds, it’s a pretty good bet the business analyst would have no idea where to find that contact information.

How does Team Point change behavior?

As leaders gather more experience, they can articulate situations more clearly—even when they aren’t all that clear. A good example is our contact list question. It’s easy to explain how the information would be shared among team members. However, it’s much harder to demonstrate how to set up and execute that process to achieve the desired outcome effectively.

So, how does Team Point change behavior? It measures what matters.

We’re defining the standard upon which each individual and every team member will be measured. Most teams have goals, but when you ask for clarification of a goal, there is no supporting detail. It’s simply a vast wasteland of assumptions.

This is where we add clarity—because being measured against a standard that isn’t defined sucks. It’s also not fair to your team. The standard for good performance should be visible and transparent to everyone on the team. There’s no grey performance in this model. You’re either above the line or below the line.

Using gamification to drive desired outcomes

If there’s not a clear standard for good performance, the team operates in this fuzzy state. They make some effort but never exert all their energy because the objective isn’t clear.

Do you need a contact list? Do you need an architecture plan? Do you need to schedule weekly meetings? The project manager can guess but doesn’t know for sure. Maybe they should know, but perhaps they shouldn’t.

This model defines behavior that’s expected 100% of the time. There is no longer any question about what’s expected. Of course, based on the size of projects, some models require more artifacts or less, but what’s relevant here is, even then, what’s expected is clear. There’s no grey.

Every team member knows their unique score based on their demonstrated competency, and they also know how they’d rate the entire team. This creates healthy competition among team members without adding undue team friction.

How to implement Team Point

Our goal is to provide individuals and teams with a quantifiable baseline for performance. To administer the evaluation, the same questions need to be asked of each individual in a similar role. For example, all project managers should have the same (or a very similar) set of questions. The same logic applies to program managers. Therefore, all program managers should receive the same questions. This ensures that results can be aggregated and rolled up smoothly.

Here’s how you start to roll out Team Point for your group:

- Choose categories for evaluation

- Identify your questions related to each category

- Determine acceptable answers

- Conduct the Team Point assessment

- Report individual and team results

You want managers to think, so explain what you’re looking for, not how to produce those results. For almost every question, there are multiple acceptable approaches to the answer.
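The rollout steps above can be sketched in a few lines of code. This is only a rough illustration, not the Team Point tool itself: the category names, the questions, the acceptable answers, and the rule that a category score is the percentage of questions answered acceptably are all assumptions I made up for the example.

```python
from collections import defaultdict

# Hypothetical assessment: category -> {question: set of acceptable answers}.
ASSESSMENT = {
    "Scope management": {
        "Is there a signed-off scope statement?": {"yes"},
        "How are scope changes approved?": {"change board", "sponsor sign-off"},
    },
    "Risk management": {
        "Is the risk register reviewed weekly?": {"yes"},
    },
}


def score_individual(answers):
    """Return {category: percent of questions answered acceptably}."""
    scores = {}
    for category, questions in ASSESSMENT.items():
        hits = sum(
            1 for question, acceptable in questions.items()
            if answers.get(question, "").lower() in acceptable
        )
        scores[category] = 100.0 * hits / len(questions)
    return scores


def score_team(all_scores):
    """Average each category across individuals who got the same questions."""
    totals = defaultdict(list)
    for scores in all_scores:
        for category, value in scores.items():
            totals[category].append(value)
    return {category: sum(v) / len(v) for category, v in totals.items()}


pm_a = score_individual({
    "Is there a signed-off scope statement?": "Yes",
    "How are scope changes approved?": "email",
    "Is the risk register reviewed weekly?": "yes",
})
pm_b = score_individual({
    "Is there a signed-off scope statement?": "yes",
    "How are scope changes approved?": "Change board",
})
team = score_team([pm_a, pm_b])
print(pm_a, pm_b, team)
```

Because every project manager answers the same question set, the individual scores roll up into a team score with a plain average. More than one acceptable answer per question keeps the focus on the result, not on a single prescribed approach.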

How to measure and report results

There are two levels of reporting results: individual and team.

The individual results are specific to that resource and address their areas of strength and weakness. The team results show rolled-up performance and represent the directional performance of the team.

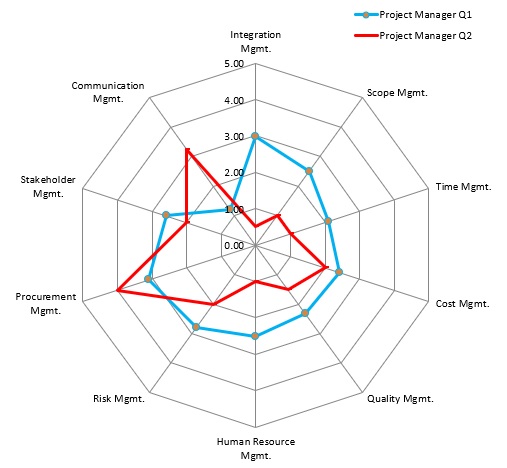

Illustration 1.0 Individual Performance

Individual performance is always accompanied by detailed explanations of how the individual performed compared to the team average. This helps an individual understand precisely how they’re performing in relation to their peers. The percentages in the example below don’t match the graphic above; when distributing individual results, the graph and the percentages would match.

- Integration management: 33% vs. 55%

- Scope management: 78% vs. 45%

- Time management: 78% vs. 32%

- Cost management: 78% vs. 76%

- Quality management: 43% vs. 11%

- Human Resource management: 44% vs. 33%

- Risk management: 87% vs. 51%

- Procurement management: 51% vs. 42%

- Stakeholder management: 86% vs. 67%

- Communication management: 13% vs. 23%
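To make the comparison concrete, here is a small sketch that takes the percentages listed above (individual first, team average second) and works out the gap for each area. Treating the team average as the line to be above or below is my assumption for this example; in practice, the line would be whatever standard you defined.

```python
# (individual %, team average %) per area, copied from the list above.
RESULTS = {
    "Integration management": (33, 55),
    "Scope management": (78, 45),
    "Time management": (78, 32),
    "Cost management": (78, 76),
    "Quality management": (43, 11),
    "Human Resource management": (44, 33),
    "Risk management": (87, 51),
    "Procurement management": (51, 42),
    "Stakeholder management": (86, 67),
    "Communication management": (13, 23),
}


def gaps(results):
    """Return {area: individual minus team average}, in percentage points."""
    return {area: individual - team for area, (individual, team) in results.items()}


def below_team(results):
    """Areas where the individual scores under the team average."""
    return [area for area, gap in gaps(results).items() if gap < 0]


# Print the areas from weakest to strongest relative to the team.
for area, gap in sorted(gaps(RESULTS).items(), key=lambda item: item[1]):
    print(f"{area:28s} {gap:+d} pts")
```

For this individual, only integration management and communication management fall below the team average, which tells you exactly where to focus coaching.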

Team Point is powerful in that you’re able to show individual performance charted over team performance. So, for example, you’d compare Q1 versus Q2 performance of a particular project manager.

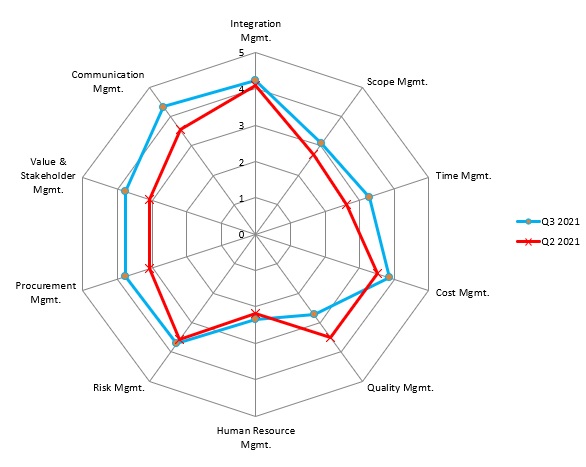

Illustration 2.0 Team Performance

You’d also report team performance quarter over quarter. This helps to illustrate directional progress and precisely measures any best practices or elements you, as a leader, introduced that might improve team results.

This gives resources a view of where they are individually, how they rank against the team as a percentage of effectiveness, and where they are compared to the previous quarter.

Why it’s so powerful

This model translates soft activities into hard outcomes. For example, let’s assume that, over the quarter, you made some adjustments to the team:

- The lifecycle steps were reduced.

- The team was retrained on financial management.

- Maintenance of artifact documentation was transitioned to a project coordinator.

- An intern model was introduced, and two new interns are assisting senior-level project managers.

- The project management meeting structure was optimized.

How do you measure any of these benefits? It’s possible, of course, but it’s extremely challenging. Using Team Point, you can link the best practices you implemented to a demonstrated competency model that can be quantified.

Hopefully, these insights offer a new perspective on using Team Point to precisely pinpoint where your team needs to improve and, similarly, where they’re performing strongly.

If you don’t subscribe to my newsletter, please do so at newsletter dot data science CIO dot com. I share insights every week to a select group of individuals on new concepts in innovation and digital transformation that I’ve been made aware of and love to share.

If you found this article helpful, that’s great! Also, check out my books, Think Lead Disrupt and Leading with Value. They were published in early 2021 and are available on Amazon and at http://www.datsciencecio.com/shop for author-signed copies!

Hi, I’m Peter Nichol, Data Science CIO. Have a great day!

Using NLP to expand the democratization of data

How are you leveraging automation in your organization today? Which parts of the company are optimizing artificial intelligence to enable a better customer experience?

Hi, I’m Peter Nichol, Data Science CIO.

Today, we’ll talk about natural language processing (NLP) and how it can help accelerate technology adoption in your organization.

Neuro-linguistic programming (influencing value, beliefs, and behavior change) and natural language processing (helping humans better interact with machines or robots) are separate concepts, both of which are identified by the NLP acronym. Make sure you’re clear about which one you’re talking about.

Again, today we’ll be discussing natural language processing.

What is NLP?

NLP is the combination of linguistics and computer science.

Essentially, NLP helps computers better understand what we humans are trying to say. The application of NLP makes it feasible for us to digest, test, and interpret language; measure sentiment; and, ultimately, understand what exactly we mean behind what we’re saying. NLP incorporates a dynamic set of rules and structures that enable computers to accurately interpret what’s said or written (speech and text).

How is NLP being leveraged?

There are many ways in which we see NLP being adopted by organizations. First, we have chatbots and other customer-based tools that allow consumers to interface and interact with technology. In more simplistic terms, the application of NLP helps computers recognize patterns and interpret phraseology to understand what we, as humans, are attempting to do. There are many great examples of NLP in use today; here are a few of my favorites:

- Siri and Google Assistant

- Spam filtering

- Grammar and error checking

Siri translates what you say into what you’re looking for by utilizing speech recognition (translating speech into text) and natural language processing (interpreting that text). So, for example, speech recognition handles your unique voice timbre and accent, and NLP then interprets what you’re trying to say. Google Assistant is another excellent example of speech recognition and natural language processing working in tandem.

Spam filtering uses NLP to interpret the intent of a message by recognizing patterns of expression and thought processes in the text. In this way, spam filtering uses NLP to determine if the message you received was sent from a friend or from a marketing company.

Grammar-error checking is an excellent use of NLP and super helpful. NLP references a massive database of words and phrases compiled from these use cases and compares what’s entered with that database to determine if a pattern has been used previously.

Text classification looks at your email and makes judgments based on text interpretation. If you’ve ever paid attention to your Gmail, you might have noticed your email is categorized in several ways. For example, you’ll have your primary email in one folder, and you’ll have spam and promotional emails in another. You didn’t make those delineations; an NLP agent did.
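To give a feel for how text classification can work, here is a toy sketch using naive Bayes, a classic word-counting technique. I’m not claiming this is how Gmail or any commercial spam filter does it; the training messages and the labels are made up, and a real system would learn from far more data.

```python
import math
from collections import Counter

# Tiny, made-up training set: (text, label).
TRAINING = [
    ("limited time offer buy now", "promotional"),
    ("exclusive discount offer for you", "promotional"),
    ("lunch on friday with the team", "primary"),
    ("notes from the project meeting", "primary"),
]


def train(examples):
    """Count words per label and messages per label."""
    word_counts = {}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(text.split())
    return word_counts, label_counts


def classify(text, word_counts, label_counts):
    """Pick the label with the highest naive Bayes log-probability."""
    vocabulary = {word for counts in word_counts.values() for word in counts}
    total_messages = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, counts in word_counts.items():
        score = math.log(label_counts[label] / total_messages)
        label_total = sum(counts.values())
        for word in text.lower().split():
            # Add-one (Laplace) smoothing so unseen words do not zero the score.
            score += math.log((counts[word] + 1) / (label_total + len(vocabulary)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


word_counts, label_counts = train(TRAINING)
print(classify("discount offer this friday", word_counts, label_counts))
print(classify("project meeting notes", word_counts, label_counts))
```

The classifier never sees a rule such as “offers are promotional.” It simply learns which words show up more often under each label and scores new messages accordingly.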

NLP is behind all that type of stuff. It’s artificial intelligence. Essentially, it’s looking at what you say, what text is being written, and interpreting what you mean. As NLP adoption grows and is brought into mainstream business software, we realize there’s much potential to leverage NLP in our environments. The potential of NLP is powerful, especially when we begin to focus on automation and, ultimately, data or technology adoption.

NLP’s role in data democratization

Focusing on data democratization is an excellent example of how NLP can make its mark. Many CIOs and leaders are building data-driven cultures and striving to help raise the data awareness of employees about how to leverage and optimize data, provide insights, and determine and interpret analytics from more extensive data sources. But that’s not always possible.

A lot of the data we use (social media posts, other types of generic input, even voice) is unstructured. It doesn’t fit well into traditional databases made up of columns and rows, so we need different ways to interpret it.

Historically, we needed particular individuals that could interpret and understand how that unstructured data could be aggregated, cleansed, and, finally, provided to consumers. Today, with data democratization, we’re trying to access tools and technologies that make interpretation fast and seamless. In addition, data democratization attempts to get everyone in the organization comfortable with working with data to make data-informed decisions.

Why does data democratization matter when building a data-driven culture? First, a significant part of building a data-driven culture is offering greater access to the data. This means individuals who otherwise might not have access to that data set can now execute queries, gather correlations, and generate new insights.

The first step toward building that data-driven culture is ensuring that you’ve captured intelligent and valuable data. Next, consider adding additional automation into your ecosystem and explore new ways to work with your business applications.

NLP has the potential to accelerate your most critical initiatives. Take time today to discover which of your approved initiatives could benefit from NLP.

Hi, I’m Peter Nichol, Data Science CIO. Have a great day!

Building in operational resilience with managed service providers

Which activities do you do today that you should probably delegate? Similarly, which activities does your department own that probably should be delegated?

Hi, I’m Peter Nichol, Data Science CIO.

Today we’re going to talk about managed service providers or MSPs.

Why make the shift to MSPs?

The concept behind a managed service provider is to shift ownership of daily activities to allow your team to focus on more important strategic priorities.

The concept sounds pretty simple. However, telling the team that 90% of what they did yesterday is going to be outsourced is tough. However, if we go back to basics, the principle of leveraging MSPs makes sense.

Consider for a minute what activities in your own life you delegate. Maybe you outsource lawn care to the neighbor kids or hire a cleaning company to keep the vacation property looking amazing. In both cases, you’ve decided that your time is better spent somewhere else—you can add greater value by doing a different type of activity. This is the same business case your organization is making. Your team’s time is better spent on building out core organizational capabilities, not focusing on non-essential organizational capabilities.

The case for MSPs in our business

Similarly, when we perform our organizational duties, we often take ownership of pieces that fall through the cracks over time. We tell ourselves it’s necessary to address the immediate need, such as a frustrated stakeholder or client. Then, in the spirit of being a good corporate citizen and helping our peers, we shoulder this additional responsibility. We might have developers take greater ownership of application compliance because security is understaffed, or we might have project managers start architectural models because the architecture review board isn’t mature. In both cases, a team has acquired new responsibilities that, on reflection, shouldn’t be owned by those teams.

When engaging a managed service provider, the objective isn’t to transfer busywork to an outside company. If activities don’t add value, they should be challenged and stopped. However, we do want to transfer activities that can be more effectively done by a third party or by experts.

It’s essential to start identifying activities that your department has picked up ownership of over the last six or nine months. Call out activities, processes, and outputs that your team realizes you shouldn’t own and that need to be transferred back to the original department or group.

Set up a discussion with the department’s leaders for knowledge transfer. Communicate that six months have gone by, and call out the functions that need to be reabsorbed into your peer’s organization.

Focus on the most significant impact on your business

When we engage MSPs, we’re talking about shifting ownership of areas that add value but aren’t core competencies.

- What are the core competencies of your business?

- What are the most important things to achieve?

- What drives the most significant value for your organization?

There will be areas that drive benefits but that aren’t the most important for your organization. Ask yourself, “If we become number one in this capability, will that become a competitive advantage?” Often, what are considered non-essential capabilities depend on your core business model.

- If you’re a healthcare provider, most likely marketing isn’t your top priority.

- If you’re a software company, probably billing isn’t your top priority.

- If you’re an architecture company, you’re probably not focusing on IT support and running a help desk.

Look at your core business model to discover how your business can effectively leverage the benefits of a managed service provider to enhance operational or day-to-day activities. Of course, these operational activities are essential to keep your business running, but you wouldn’t consider them to be core capabilities for your business.

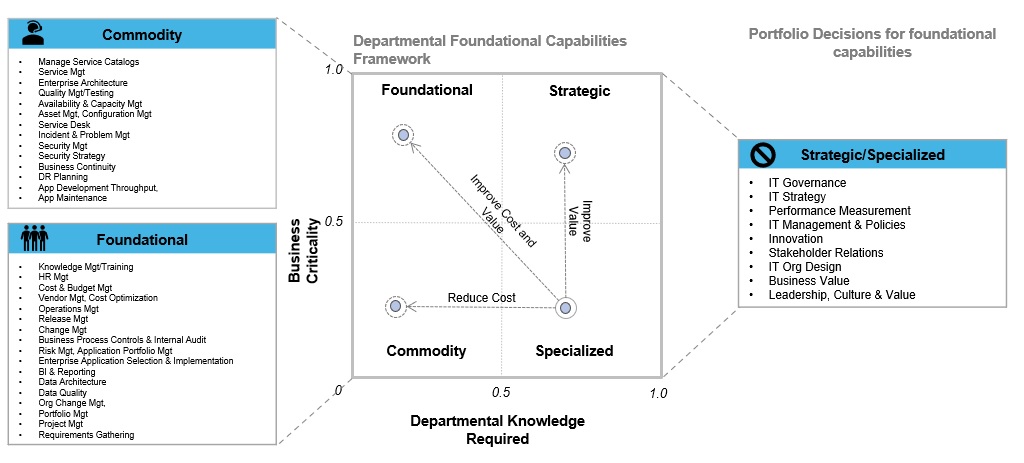

Illustration 1.0 Foundational vs Commodity Capabilities

The benefits of MSPs

There are a lot of benefits when utilizing the services of MSPs.

First, we have the benefit of expertise. In theory, this is going to be a provider who has deeper and broader expertise than your current team.

The second benefit is predictability and, more specifically, financial predictability. Instead of having costs just being incurred, you now have predictable financial spend monthly, quarterly, and annually. As opposed to anticipating all the variable costs, your provider can offer insights into when unplanned expenses are likely to be incurred. The provider can use their expertise to predict the demand ebbs and flows to utilize resources and mitigate risk more efficiently.

Third, you have the benefit of the provider anticipating unknown costs. Because the managed service provider has other clients in various industries and sectors, they can predict changes in market conditions that your internal team might not be familiar with.

Environmental changes or the introduction of new models—such as a change to the licensing fee structure—are something that MSPs can help your team stay ahead of. Here are some additional benefits of leveraging MSPs:

- Better visibility over contract spend

- More predictable costs

- Enhanced service quality

- Improved automation

- Greater simplicity

- More demand flexibility

- Expanded scalability

- Greater skill and expertise

- Broader on-call coverage for unplanned events

- Standardized security models for data

- OpEx reduction

- Reduced training

- Lower break-fix ratio

Which areas benefit the most from MSPs?

Let’s concentrate on three areas in which managed service providers are most likely to be effective.

Application maintenance

Third-party application maintenance is a huge part of IT’s annual operating budgets. By leveraging managed service providers, these costs can typically be decreased anywhere from 25% to 50%. This allows maintenance spend to shift toward funding emerging technology and innovation pilots. Without a high-performance team, managing legacy systems can be very costly, often requiring multiple resources, all with specializations.

Network and operations

Network and operations are essential to most businesses. However, they’re also relatively stable once established. The cost to maintain these environments can vary wildly. For example, upgrading the mobile platform for an extended range can be a complex initiative. Yet, once that project has been completed, those resources sit virtually idle until the next project. This is a great business case for leveraging MSPs to augment existing infrastructure teams.

Usually, network and operations functions operate effectively unless additional capacity is required.

IT help desk

Virtually every business needs standard IT support. This covers things like ordering new laptops and mice for existing and new users. Users want someone to call when their computer doesn’t work or they can’t log in to the VPN. These calls, while important, aren’t strategic. These non-essential functions are great candidates for off-loading to an MSP.

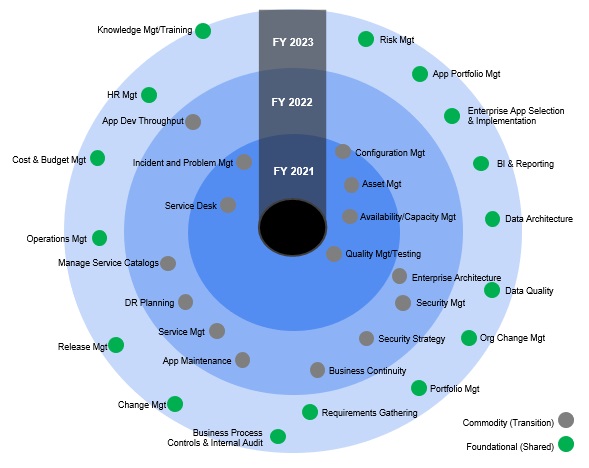

Illustration 2.0 Capabilities Timeline

What metrics matter?

While there’s an almost unlimited combination of metrics that help to drive MSP performance, here are the metrics I’ve found over the years to be most effective:

- Customer satisfaction score

- First-call resolution

- Escalated tickets

- Resource utilization

- Newly discovered applications, devices, processes

- Contract renewals

- Service level agreement (SLA) compliance rate

- Churn rate

- Mean time to resolve (MTTR)

- Cost per ticket

- Effective hourly rate (total cost divided by hours worked)

- Tickets open by type

- Average time to acknowledgment

- Average time to resolution plan/total time to resolution plan over full tickets x100

- Kill rate of tickets = closed tickets/tickets open
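Several of these metrics are simple ratios, so a short sketch may help show how they could be computed from raw ticket data. The `Ticket` fields, the sample numbers, and my reading of “tickets open” as all tickets opened in the period are assumptions for illustration, not a standard your MSP will necessarily use.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Ticket:
    opened_hour: float              # hours since the start of the period
    resolved_hour: Optional[float]  # None while the ticket is still open
    first_call_resolved: bool = False


def msp_metrics(tickets, total_cost, hours_worked):
    """Compute a few of the listed metrics for one reporting period.

    Assumes at least one ticket was opened and at least one was closed.
    """
    closed = [t for t in tickets if t.resolved_hour is not None]
    return {
        # Mean time to resolve, over closed tickets only.
        "mttr_hours": sum(t.resolved_hour - t.opened_hour for t in closed) / len(closed),
        "first_call_resolution_pct": 100 * sum(t.first_call_resolved for t in tickets) / len(tickets),
        "cost_per_ticket": total_cost / len(tickets),
        # Effective hourly rate = total cost divided by hours worked.
        "effective_hourly_rate": total_cost / hours_worked,
        # Kill rate = closed tickets / tickets opened in the period.
        "kill_rate": len(closed) / len(tickets),
    }


tickets = [
    Ticket(0, 4, first_call_resolved=True),
    Ticket(2, 10),
    Ticket(5, 11),
    Ticket(8, None),
]
metrics = msp_metrics(tickets, total_cost=2000.0, hours_worked=40)
print(metrics)
```

Writing the formulas down this explicitly is also a useful exercise before contract negotiation, because it forces both sides to agree on what each metric actually counts.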

Make sure hard metrics make it into your MSP contract. It’s much easier to discuss metrics versus having a conversation about why folks feel expectations aren’t being met.

How to select the best MSP

Hiring an MSP can be confusing. It’s a huge decision, and if the help desk doesn’t work on day one, the transition can be embarrassingly loud. So, here are the key steps to select the best MSP for your situation:

- Confirm domain expertise. Focus on engaging a vendor with domain expertise—not just in your vertical industry but in the space in general. For example, if you’re outsourcing the IT help desk for an energy company, make sure the vendors have experience managing help desks and have knowledge of the energy sector.

- Ask for references. Requesting references is a must. We all know references can be manufactured or made up and typically are. However, it’s still important to talk to somebody who was a client of the MSP to gain a better understanding of their quality of service.

- Validate an anticipatory culture. Make sure the MSP company culture supports planning ahead rather than having a reactive mindset. The MSP must be capable of staying ahead of changing business conditions and not have a habit of chasing significant changes.

- Focus on value over hours. Take time to construct your MSP contract to be framed around outcomes and value realized, not hours billed.

- Use performance measures to determine success. When designing the delivery structure, take care to develop service level objectives (SLOs), operational level objectives (OLOs), and business level objectives (BLOs) to measure and validate performance.

- Match the organizational structure to demand. Too often, we focus on the contract and the terms and miss the big picture. It’s imperative to ensure that you’re not losing support by shifting to MSPs. For example, if you have 20 top resources supporting a capability, and the MSP suggests aligning four full-time equivalent (FTE) resources, that’s not good. No matter how good the resource quality, four people won’t match the output of a team five times that size. You’re simply not going to get the same level of performance from 20% of the resources you have on the ground today. In this case, either dial up the resource requirements or drastically shift delivery and performance expectations for the new team.

I’m glad to share the different approaches when considering engaging a managed service provider. If you transition effectively, you’ll provide even more efficient and effective services for your business partners.

Hi, I’m Peter Nichol, Data Science CIO. Have a great day!

Capacity-based funding for the modern product team

How are your teams forecasting work? How do you anticipate a budget when you’re not sure what you’re going to spend in a fiscal period?

Hi, I’m Peter Nichol, Data Science CIO.

Today we’re going to talk about capacity-based funding. This is a concept that many have heard of but few leaders execute effectively.

What is capacity-based funding?

Capacity-based funding is more agile and iterative than project-based funding and uses team size rather than project size to determine funding parameters.

To better understand capacity-based funding, we’ll use project-based funding as a reference. Project-based funding is the funding of a single initiative. You have an annual review cycle in a project-based funding model, almost like an annualized budget cycle. Many leaders would argue that not only is project-based funding inefficient, but it never did work.

There are three main areas where capacity-based funding differs from project-based funding. First, it has a more extended period of estimation. In this new model, we’re funding a product team. This is a group that’s focused on a strategic or a multi-year initiative. This funding is also provided for a longer time, typically 12, 14, or 18 months. Second, you don’t measure the costs, and you’re also not calculating the actual spend over time. Dropping these labor-intensive tasks frees up the team to focus on the actual product work and high-value-added activities. This shifts the focus away from operational tasks toward activities that directly add value to our product. Third, teams operate autonomously. The team members work in their own little sphere and are focused on delivering a product. The team doesn’t ask for permission. They don’t escalate questions about the direction or which feature is more important. These teams are decision-makers. They analyze, interpret information, decide, and move on. Operating on their own principles and norms removes much of the bureaucratic red tape that traditionally slows down high-performing teams.

The influences that press on capacity-based funding models

As soon as leaders hear that costs aren’t tracked, other questions get raised—mainly, “How do we influence the outcome and performance of teams using a capacity-based funding model?” There are a few different questions to ask to determine this:

- How much?—i.e., work volume or total hours

- How often?—i.e., work priority

- How many? i.e., team size

The first thing to determine is how much. Here we’re talking about the total work covered by the team. Another way to look at “how much?” is to consider the total hours of work (our capacity) over a given period. Often, this period extends over six months or a year. The second thing to know is how often. What are the frequency parameters under which the team will operate? Is this a team that meets once a year for three weeks, or is this team funded through the entire year? The concept of frequency is tightly coupled with the idea of priority. What’s the priority for the work the team is expected to deliver? The third determination is how many. This question refers to how large the team is. What’s the size of the team and the number of dedicated resources? What’s in scope (how much) and what’s the priority (how often) together drive the team size (how many).
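The relationship between how much, how often, and how many comes down to simple arithmetic. Here is a rough sketch; the function names, the 80% utilization factor, and the blended hourly rate are assumptions I’ve picked for illustration, so swap in your own numbers.

```python
import math


def capacity_hours(team_size, hours_per_week, weeks, utilization=0.8):
    """Total productive hours the team can deliver in the funding window."""
    return team_size * hours_per_week * weeks * utilization


def funding_required(team_size, blended_hourly_rate, hours_per_week, weeks):
    """Fund the team for the whole window, independent of project scope."""
    return team_size * blended_hourly_rate * hours_per_week * weeks


def team_size_needed(work_hours, hours_per_week, weeks, utilization=0.8):
    """Smallest whole team that covers the estimated work volume."""
    per_person = hours_per_week * weeks * utilization
    return math.ceil(work_hours / per_person)


# Hypothetical example: 10,000 hours of work over a 50-week window.
size = team_size_needed(10_000, hours_per_week=40, weeks=50)
print(size)
print(capacity_hours(size, 40, 50))
print(funding_required(size, blended_hourly_rate=100, hours_per_week=40, weeks=50))
```

Notice that the funding figure depends only on the team and the window, not on any single project’s scope. That’s the core difference from project-based funding.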

What are the challenges with capacity-based funding?

With these benefits, it would be easy to assume that capacity-based funding is a utopian model and that everybody should move to this model. Well, it’s not all roses. There are several reasons why leading product initiatives with capacity-based funding models is difficult for executive leaders. Here are a few of the big hitters:

- Work delays are easier to hide.

- Shifts in priorities have a cost.

- Buckets of funds are harder to see than in a focused project.

- There may be a shift in portfolio leaders to manage new factors.

First off, it’s tough to identify work delays using this model. If you have project funding, it’s usually funded for a defined scope. However, with capacity-based funding, elements could be in scope, quickly determined out of scope, and then included back into the initiative’s scope. With this scenario, it’s tough to determine the original state of the ask and what the decision-making was to get to our current scope. There’s no way to track changes in a capacity-based funding model, unlike the change management we have in conventional project-based funding. The concept doesn’t exist because we’re not tracking expenses, and we’re not tracking the effort—or, more specifically, the dollars involved in that work product. So not having the specificity can be a big challenge if leadership continues to ask for the deltas; i.e., how we got from there to here.

Second, we aren’t tracking any financial spend. This results in not having a low-level (line-item level) of financial details to explain variances. For example, if leaders want to know how much functionality “A” costs so that the cost can be allocated to a business unit, we won’t have that detail. Of course, the team could develop some assumptions, but none of these will be tied to real numbers. Now, if leaders need that information tracked, it can be tracked. But, if that’s the case, you’ve chosen the wrong tool for your financial planning. The absolute truth is that you’re not in an agile environment. You’re operating a waterfall methodology and pretending to be agile. I’ve been a leader operating in these types of environments, so take it from me: This makes everyone’s life miserable. This hybrid model is the worst of both approaches. It saps energy from the team and slows down team velocity.

Third, the team must have the autonomy to operate. This requires less—not more—oversight on the team. This also introduces more leadership challenges depending on the team members. This model works great for high-performing teams that gel and work well together, but it creates problems for younger and more immature groups.

How can portfolio leaders get ahead?

As we start to get into the details, we quickly run up against how we lead these opportunities. There are three strategies I want to share to optimize teams using capacity-based funding models.

Time series analysis

Time series analysis helps to analyze how long each segment takes to complete. By using this approach to forecast future performance, you’re able to determine the efficiency of your overall process.
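As a minimal sketch of the idea, a simple moving average can turn past segment durations into a forecast. The moving average is my choice for illustration (there are many forecasting methods), and the sprint data is made up.

```python
from statistics import mean


def moving_average_forecast(cycle_times, window=3):
    """Forecast the next value as the mean of the last `window` observations."""
    if len(cycle_times) < window:
        raise ValueError("need at least `window` observations")
    return mean(cycle_times[-window:])


# Hypothetical days to complete the "build" segment over six sprints.
build_days = [12, 11, 13, 10, 9, 8]
forecast = moving_average_forecast(build_days)
print(forecast)
```

If the forecast keeps dropping from one period to the next, the segment is getting more efficient. If it climbs, that’s your cue to look closer.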

Pipeline analysis

Pipeline analysis brings in the cycle time for all the steps of the process. This analysis offers insights about specific pieces that make it through the pipeline and those that don’t. This step helps to provide a mid-point check of the efficiency of the flow or value stream.
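Here is a rough sketch of what a pipeline analysis could calculate: the pass-through rate of each step, the total cycle time, and the slowest step. The step names and counts are hypothetical, and a real pipeline would pull these from your work-tracking tool.

```python
# Hypothetical pipeline: (step, items entering, items completing, average days in step).
PIPELINE = [
    ("intake", 40, 32, 3.0),
    ("design", 32, 28, 5.0),
    ("build", 28, 21, 10.0),
    ("release", 21, 20, 2.0),
]


def pipeline_report(steps):
    """Return pass-through rate per step, total cycle time, and the slowest step."""
    pass_through = {name: done / entered for name, entered, done, _ in steps}
    total_days = sum(days for *_, days in steps)
    slowest = max(steps, key=lambda step: step[3])[0]
    return pass_through, total_days, slowest


pass_through, total_days, slowest = pipeline_report(PIPELINE)
# Share of items that entered the first step and completed the last one.
overall = PIPELINE[-1][2] / PIPELINE[0][1]
print(pass_through, total_days, slowest, overall)
```

In this made-up example, only half of what enters the pipeline makes it out, and the build step both takes the longest and drops the most work, which is where a mid-point check would focus.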

Value leakage

Value leakage identifies where value was lost. Quite often, we’re managing pipeline throughput (outcomes) but not interim milestones, such as process gates or milestones in the middle of our plan. Unfortunately, if we only focus on the end game, we miss areas where value dripped out of our process.

It’s excellent if your process is efficient. However, ultimately, we need to measure the cadence (speed) and outcomes (value delivered) resulting from our process.

Now you’re empowered with new insights and tips for managing a capacity-based funding model.

Hi, I’m Peter Nichol, Data Science CIO. Have a great day!

A testing paradigm to fast track the availability of business functionality

When you do root-cause analysis, you always discover functionalities missed in testing or defects that were put into production that could have been identified earlier in the process.

Hi, I’m Peter Nichol, Data Science CIO.

What does shift left mean?

Today, we’re going to talk about the concept of “shifting left and shifting right.” Then we’ll cover the “shifting in reverse” theory. All right, I made up the “shifting in reverse” theory. Shifting left and shifting right are what we’ll explore today.

Shifting left is a concept that was introduced within the last 10 years or so, but it originated in the 1940s when IBM introduced the Harvard Mark I computer, also known as the IBM Automatic Sequence Controlled Calculator (ASCC). The level of technological advancement at the time was a huge deal. This computer cost about $5.7 million in today’s dollars, and it weighed 9,445 pounds or 4.7 tons. It also came with over 500 miles of wires, three million connections, and over 35,000 contacts. This was an extremely complicated computer that took years to design, assemble, and build fully. Nevertheless, the computer was a success, and it was pretty powerful for its time.

How shift left originated

While the computer was powerful, developers didn’t have an easy time working with this massive machine. If developers had a code mistake, they had to rerun their code (cards), and rerunning code was expensive because the cost of computing was expensive. A concept was developed to move testing to earlier in the process to decrease risk and lower costs. Hence, the idea of shift left was coined and began to take hold.

What are the advantages of shift left?

There are three significant benefits in adopting a shift-left technology culture.

The first is early detection of failure points. By testing earlier in the lifecycle, developers can identify defects, code breaks, and problems with logic sooner. This leads us right into our second benefit, which is cost avoidance. When we test earlier in the process, we reduce the cost of fixing defects and decrease the likelihood of extensive remediation efforts. The third benefit is that we can get functionality into production much more effectively and sooner because we can reduce the lifecycle. Shift-left delivery models also encourage practices such as:

- Developers test their code

- Modularized functions

- Co-location of developers and testers

How does shift right fit into the mix?

This leads us to the concept of shifting right. Shifting left—testing earlier in the process—seems like common sense. It just sounds reasonable.

When we look at shifting right, the logic seems less clear. Why would we shift right when we just put all this time and effort into shifting left? Also, why shift right at all?

The concept of shifting right isn’t mutually exclusive with the idea of shifting left. Surprisingly, the concepts are complementary.

What are some excellent examples of shifting right?

The problem that shifting right addresses is that once functionality goes into production, typically no additional testing is performed. That functionality is, in essence, forgotten. From there on, we assume the functionality is working as expected. Of course, new functionality is tested immediately post-deployment. However, here we’re talking about a week or two later. Does your IT team continue to test after that two-week window? Doubtful. We assume that there won’t be defects after that point and, if there are, the business users will discover and report them.

Do we want our business partners and customers to find defects and problems with production? Hell, no. We want to fix them proactively before our business partners identify those problems. This is precisely where the shift right mindset adds value. I’ll explain the three main concepts behind shift right briefly, including A/B testing, dark launching, and chaos testing.

- A/B testing: This concept releases two variants of a feature to different groups of production users and compares the results, confirming that the initial design requirements actually work in production.

- Dark launching: This is the process of slowly and unofficially releasing functionality to production. The key here is that the functionality being released isn’t officially announced to users. LinkedIn is well known for leveraging this approach as they release functionality to select groups of influencers. Functionality appears on a page and is immediately available. Members can either leverage the new features or not. However, rarely are they released to all members.

- Chaos testing: This is the idea of making sure we have unplanned test scenarios as part of our test plan. Chaos testing can involve simulated stress testing or injecting other types of errors or defects into the mix. For example, we might deliberately get the system to throw errors, inject rare scenarios, or run some edge-case scenarios when trying to break a piece of functionality that didn’t handle the exception effectively.
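One common way to support both A/B testing and dark launching is to place each user in a stable bucket and release functionality to only some buckets. The sketch below is a generic illustration under my own assumptions: the function names, the feature name, and the use of a SHA-256 hash for bucketing are mine, and I’m not describing how LinkedIn or any specific vendor implements this.

```python
import hashlib


def bucket_for(user_id: str, feature: str) -> int:
    """Map a user to a stable bucket from 0 to 99 for a given feature."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def in_rollout(user_id: str, feature: str, percent: int) -> bool:
    """Dark launch: quietly enable the feature for roughly `percent` of users."""
    return bucket_for(user_id, feature) < percent


def ab_variant(user_id: str, feature: str) -> str:
    """A/B test: split users into two groups of roughly equal size."""
    return "A" if bucket_for(user_id, feature) < 50 else "B"


users = [f"user-{n}" for n in range(1000)]
enabled = [u for u in users if in_rollout(u, "new-profile-page", 10)]
print(len(enabled), ab_variant("user-1", "new-profile-page"))
```

Because the bucket comes from a hash rather than a random draw, the same user always sees the same experience, and raising the percentage only ever adds users to the release.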

Here are some additional examples of shift-right concepts:

- Canary testing

- Continuous quality monitoring (CQM)

- Code instrumentation

- Production user monitoring

- Production testing

As you race into your workweek, ask yourself, “Am I leveraging the shift-left methodology? Am I testing as early in that lifecycle as possible?” Once you have those answers, ask a second set of questions: “Am I shifting right? Do I have controls in place to test and retest functionality after it’s in production?”

Save your users the stress of stumbling into production defects. Instead, start finding defects before your business partners do.

Hi, I’m Peter Nichol, Data Science CIO. Have a great day!