Strong Artificial Intelligence (AI).

Recursive self-improvement.

Exponential growth.

Technological singularity is a hypothetical event in which, by leveraging artificial general intelligence (known as ‘strong AI’), a computer could theoretically become capable of recursive self-improvement (redesigning itself): building a computer better than itself.

Applying recursive improvements to big data means that structures unknown to humans today could be created within a decade. Applying recursive improvements to analytics means that correlations that today have to be linear could tomorrow be non-linear: variables that appear unrelated may in fact have extreme-distance connections. Applying recursive improvements to biometric sensors could create new, unique identifying characteristics that are currently unknown and unmonitored. This opens the possibility that, through smart devices, we will be able to establish identity in new ways, such as gait analysis (identifying someone by their walking style, using wearable-device data recorded over the last 30 seconds).
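To make gait analysis slightly more concrete, here is a minimal sketch of a single gait feature, cadence (steps per minute), estimated from a 30-second window of wearable accelerometer data. It assumes a clean, pre-filtered vertical-acceleration signal; the sinusoid, the 50 Hz sample rate, the 0.5 threshold, and the function names are all hypothetical stand-ins. Real gait identification would combine many such features rather than rely on one.

```python
import math

def step_peaks(signal, threshold):
    """Indices of local maxima above `threshold` (a crude step detector)."""
    return [
        i for i in range(1, len(signal) - 1)
        if signal[i] > threshold and signal[i] > signal[i - 1] and signal[i] >= signal[i + 1]
    ]

def cadence_steps_per_minute(signal, sample_rate_hz, threshold=0.5):
    """Detected steps per minute over the whole window."""
    duration_s = len(signal) / sample_rate_hz
    return len(step_peaks(signal, threshold)) / duration_s * 60

# Hypothetical 30-second window sampled at 50 Hz: a clean sinusoid standing in
# for the vertical acceleration of someone taking 1.8 steps per second.
RATE = 50
window = [math.sin(2 * math.pi * 1.8 * n / RATE) for n in range(30 * RATE)]

print(round(cadence_steps_per_minute(window, RATE)))  # 108
```

A peak-counting detector is used only because it is the simplest thing that shows how a walking rhythm can be pulled out of raw sensor samples; noisy real-world data would need filtering first.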

Superintelligence

Futurist Ray Kurzweil, principal inventor of the first charge-coupled device (CCD) flatbed scanner, the first omni-font optical character recognition, the first print-to-speech reading machine for the blind, and the first commercial text-to-speech synthesizer, believes that the singularity will occur around 2045. Vernor Vinge argues that artificial intelligence, human biological enhancement, or brain–computer interfaces could be possible causes of the singularity, and that it would occur sometime before 2030.

“Within thirty years, we will have the technological means to create superhuman intelligence. Shortly after, the human era will be ended.” (Vernor Vinge)

In his 1993 article ‘The Coming Technological Singularity’, Vinge explains that once true superhuman artificial intelligence is created, no current models of reality will be sufficient to predict beyond it. When will the era of the robots start? It will be shortly after the death of the recommendation engines. A recommendation engine (recommender system) is a tool that predicts a user’s preference (may like, may not like) for the items in a given list. These recommendations could cover books, software, travel, and many other areas. This, however, is not artificial intelligence (AI); it is a recommendation engine. A recommendation engine uses known information to determine your likes and dislikes, typically leveraging either collaborative filtering (a recommendation based on a model of prior user behavior, often including the behavior of similar users) or content-based filtering (a recommendation based on a user’s behavior and the characteristics of the items involved, e.g. historical browsing).
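As a rough illustration of content-based filtering, the sketch below assumes each item is described by weighted attributes (genres or tags), builds a user profile by averaging the items in that user’s browsing history, and ranks unseen items by cosine similarity to the profile. The catalog, attribute weights, and function names are hypothetical, and cosine similarity is one common choice among several.

```python
from math import sqrt

# Hypothetical catalog: item -> {attribute: weight}.
items = {
    "space_opera":   {"sci-fi": 1.0, "adventure": 0.8},
    "robot_memoir":  {"sci-fi": 0.9, "biography": 0.7},
    "mountain_trek": {"travel": 1.0, "adventure": 0.9},
    "paris_guide":   {"travel": 1.0, "food": 0.6},
}

def cosine(a, b):
    """Cosine similarity between two sparse attribute vectors."""
    dot = sum(w * b.get(attr, 0.0) for attr, w in a.items())
    na = sqrt(sum(w * w for w in a.values()))
    nb = sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def build_profile(history):
    """Average the attribute vectors of the items the user has browsed."""
    profile = {}
    for name in history:
        for attr, w in items[name].items():
            profile[attr] = profile.get(attr, 0.0) + w / len(history)
    return profile

def recommend(history, top_n=2):
    """Unseen items ranked by similarity to the user's profile."""
    profile = build_profile(history)
    unseen = [name for name in items if name not in history]
    return sorted(unseen, key=lambda n: cosine(profile, items[n]), reverse=True)[:top_n]

print(recommend(["space_opera"]))  # ['robot_memoir', 'mountain_trek']
```

Nothing here creates anything unknown: the engine only re-ranks items by how closely they resemble what the user has already seen.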

In contrast, artificial intelligence takes something known and creates something unknown.

Netflix uses a form of machine learning, a subfield of AI, to support learning, prediction, and decision-making. Collaborative filtering drives the Netflix engine, and in research it is commonly used in combination with the Pearson correlation. The Pearson correlation measures the linear dependence between two variables (in this case, two users) as a function of their attributes (Jones, 2013). Many algorithms become less reliable as the population sample grows exceptionally large.

The Pearson correlation narrows the sample population down to neighborhoods based on similarity (for example, users who read the same books or travel to the same locations).

This approach leverages data from the whole population while producing targeted predictions that are accurate within a small population sample and relevant to a subsection, or neighborhood, of users.
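The neighborhood idea can be sketched as user-based collaborative filtering: compute the Pearson correlation between users over the items they both rated, keep the most positively correlated users who rated the target item, and predict a rating from their mean-centered ratings weighted by similarity. This is a minimal sketch under those assumptions, not Netflix’s actual engine; the ratings, the neighborhood size `k`, and the function names are hypothetical.

```python
from math import sqrt

# Hypothetical toy data: user -> {item: rating on a 1-5 scale}.
ratings = {
    "alice": {"book_a": 5, "book_b": 3, "book_c": 4},
    "bob":   {"book_a": 4, "book_b": 2, "book_c": 5, "book_d": 4},
    "carol": {"book_a": 1, "book_b": 5, "book_c": 2, "book_d": 1},
    "dave":  {"book_a": 5, "book_b": 3, "book_c": 4, "book_d": 5},
}

def mean(user_ratings):
    return sum(user_ratings.values()) / len(user_ratings)

def pearson(u, v):
    """Pearson correlation over the items both users rated (0.0 if undefined)."""
    common = sorted(set(u) & set(v))
    if len(common) < 2:
        return 0.0
    xs = [u[i] for i in common]
    ys = [v[i] for i in common]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0

def neighborhood(target, item, k=2):
    """The k users most positively correlated with `target` who rated `item`."""
    scored = [
        (other, pearson(ratings[target], ratings[other]))
        for other in ratings
        if other != target and item in ratings[other]
    ]
    positive = [pair for pair in scored if pair[1] > 0]
    return sorted(positive, key=lambda pair: pair[1], reverse=True)[:k]

def predict(target, item, k=2):
    """Target's mean plus the similarity-weighted, mean-centered ratings of neighbors."""
    neighbors = neighborhood(target, item, k)
    if not neighbors:
        return mean(ratings[target])
    num = sum(sim * (ratings[other][item] - mean(ratings[other])) for other, sim in neighbors)
    den = sum(sim for _, sim in neighbors)
    return mean(ratings[target]) + num / den

print([name for name, _ in neighborhood("alice", "book_d")])  # ['dave', 'bob']
print(round(predict("alice", "book_d"), 2))                   # 4.55
```

Carol, whose tastes run opposite to Alice’s, has a negative correlation and is left out of the neighborhood, which is how the prediction stays relevant to a small group of similar users.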

Turing Test Evolves

John McCarthy coined the term ‘Artificial Intelligence’ in his 1955 proposal for the 1956 Dartmouth Conference. He also invented the Lisp programming language. Until 1956, this field was referred to as machine intelligence. When the conversation turns to AI, it’s not long before talk of the Turing Test arises. Alan Turing’s 1950 paper “Computing Machinery and Intelligence” (Turing, 1950, p. 460) was first published in Mind (a British peer-reviewed academic journal currently published by Oxford University Press on behalf of the Mind Association). This seminal paper introduced the concept now known as the Turing Test (TT). The TT involves three participants in isolated rooms: a computer (which is being tested), a human, and a judge (also human). Typing through a terminal, the computer and the human both try to convince the judge that they are human. The computer wins when the judge cannot consistently tell which is which. This is the de facto test of artificial intelligence.

Stevan Harnad, a cognitive scientist, contends that the TT has evolved since 1965 and today’s Turing Test asks the question:

“Can machines do what we (as thinking entities) can do?”

Harnad also suggests that this test is not designed to trick the judge into believing that a computer is a human, but rather to establish AI’s empirical goal: generating human-scale performance capacity. The Turing Test represents what the science of AI intends to do; until then, AI remains a machine. The term ‘intelligence’ will only be bestowed on a computer after it successfully passes the TT.

Technological Singularity

George Rebane frames this well in his 2010 article “Singularity? What’s that?”, stating that “the event when machines reach par intelligence with humans is known as the technological singularity, or simply the Singularity.” The concept of singularity was named by mathematician and author Vernor Vinge because, in physics and mathematics, a singularity is a ‘point’ beyond which things are undefined: a new state where the normal rules can no longer be applied.
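The mathematical sense of the word can be made concrete with a standard textbook contrast (an illustration added here, not a model proposed by Vinge or Rebane), assuming a starting value $N_0 > 0$ and a rate constant $k > 0$. Exponential growth stays finite at every finite time, while hyperbolic growth, where the growth rate scales with the square of the current value, reaches a point in finite time where the formula is undefined.

```latex
$$\frac{dN}{dt} = kN \;\Rightarrow\; N(t) = N_0\, e^{kt} \qquad \text{(finite for every finite } t\text{)}$$

$$\frac{dN}{dt} = kN^{2} \;\Rightarrow\; N(t) = \frac{N_0}{1 - kN_0\, t}, \qquad t^{*} = \frac{1}{kN_0}$$
```

As $t$ approaches $t^{*}$ from below, $N(t)$ grows without bound, and at $t^{*}$ itself the expression is undefined: a point beyond which the normal rules of the model no longer apply.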

Consider the advancements in digestibles, wearables, and internables that even 20 years ago were basically dreams of weirdo techies and sci-fi enthusiasts. How has the advancement of distributed systems and distributed processing transformed industry over the last 10 years? Each of these ideas was previously undefined.

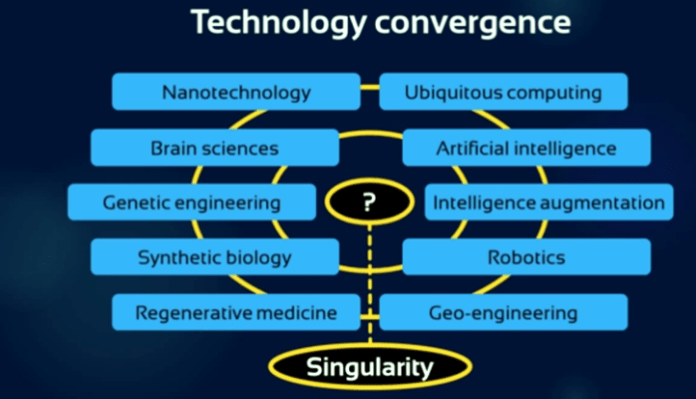

The below chart does a good job of illustrating the concept of singularity.

This abstraction of the Singularity becomes a kind of je ne sais quoi marking the future of man’s innovation.

Moore’s Law

Does Moore’s law demonstrate a valid path towards the realization of the singularity? Gordon Moore, co-founder of Intel and Fairchild Semiconductor, observed that the number of transistors in a dense integrated circuit doubles approximately every two years.

Moore’s law actually isn’t a physical or natural law, but rather a projection of a future state based on an observed trend.

Based on Moore’s law, the semiconductor manufacturing process reached a 14nm node before 2014, but would need to reach a 10nm node by 2016–2017 to ensure the rule still holds. Intel released papers about its 14nm node technology in February 2015, and the first Intel chip with 10nm technology is planned for release in late 2016 or early 2017 (Anthony, 2015). Will the 10nm node be created in time to maintain Moore’s law? As of today, it’s looking pretty good for Intel.
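The doubling rule can be expressed as simple arithmetic. This sketch assumes a clean two-year doubling period and an idealized shrink in which doubling density halves the area per transistor, so linear feature size scales by 1/√2 ≈ 0.707 per node. Under that idealization, 14nm × 0.707 ≈ 9.9nm, which is roughly why a 10nm node is expected to follow 14nm. The starting transistor count and function names are hypothetical; treat this as a back-of-the-envelope check, not Intel’s actual scaling.

```python
import math

def projected_transistors(n0: float, years: float, doubling_period: float = 2.0) -> float:
    """Moore's-law projection: the count doubles every `doubling_period` years."""
    return n0 * 2 ** (years / doubling_period)

def next_node(feature_nm: float) -> float:
    """Idealized shrink that doubles density: area halves, so length scales by 1/sqrt(2)."""
    return feature_nm / math.sqrt(2)

# Hypothetical starting point of 1 billion transistors; 4 years = two doublings.
print(projected_transistors(1e9, 4))  # 4000000000.0
print(round(next_node(14), 1))        # 9.9
```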

Step Off That Ledge

The dichotomy between the futures envisioned by Vinge and by Miguel Nicolelis, a top neuroscientist at Duke University, is almost comical. Nicolelis says that the technological singularity is a ‘bunch of hot air’ and that computers will never create a human-like brain.

Maybe this is all just fatuous, or maybe we are exploring the edges of man’s next innovation.

References

Anthony, S. (2015). Intel forges ahead to 10nm, will move away from silicon at 7nm. Ars Technica. Retrieved November 29, 2015, from http://arstechnica.com/gadgets/2015/02/intel-forges-ahead-to-10nm-will-move-away-from-silicon-at-7nm/

Wood, D. (2013). The Lead Up to the Singularity [Online image]. Retrieved November 29, 2015, from http://www.33rdsquare.com/2013/09/david-wood-on-lead-up-to-singularity.html

Harnad, S. (1992). Connecting Object to Symbol in Modeling Cognition. In A. Clark & R. Lutz (Eds.), Connectionism in Context (pp. 75–90). Springer Verlag.

Jones, M. T. (2013). Recommender systems, Part 1: Introduction to approaches and algorithms. Retrieved November 29, 2015, from http://www.ibm.com/developerworks/library/os-recommender1/

Rebane, G. (2010). Singularity? What’s that? (w/appendix). Rebane’s Ruminations. Retrieved November 29, 2015, from http://rebaneruminations.typepad.com/rebanes_ruminations/2010/04/singularity-whats-that-wappendix.html

Turing, A. (1950). Computing Machinery and Intelligence. Mind, LIX(236), 433–460. doi:10.1093/mind/LIX.236.433. ISSN 0026-4423. Retrieved August 18, 2008.

Peter Nichol empowers organizations to think different for different results. You can follow Peter on Twitter or on his blog. Peter can be reached at pnichol [dot] spamarrest.com.